AI-Based Services Design Framework

Defining a step-by-step process and new tools for conscious tech adoption.

In recent years, the emergence of AI and automation algorithms has dramatically reshaped the service landscape. The advent of generative AI has further accelerated this trend. But adopting AI consciously is one of the biggest challenges for organizations today. What’s missing is a way to step back, ask the right questions, and make choices that are both useful and responsible.

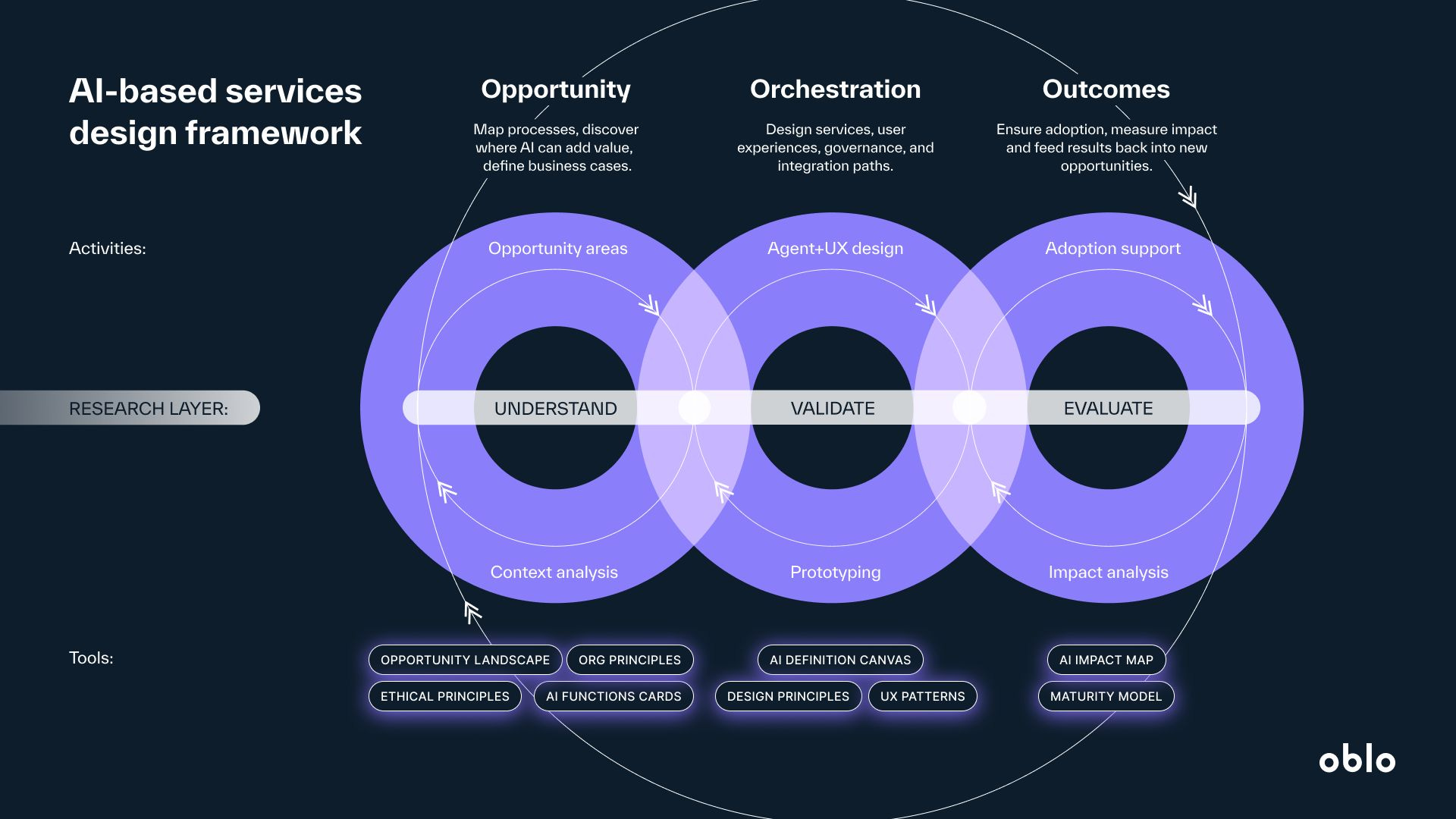

That’s why at oblo we developed the Triple-O Framework, a method for thinking about AI in projects with purpose and awareness, from defining opportunities to measuring the impact of implementation. It unfolds in three steps: Opportunities, Orchestration, and Outcomes.

1. Opportunities: Turning Hype into Strategic Choices

The first challenge in working with AI is not how to build it, but where it makes sense. Organizations often leap toward the most visible trend — generative AI today — while missing other opportunities where automation, assistance, or augmentation could transform their operations or services more sustainably.

The Opportunities phase is about mapping the current state of the service and identifying pain points, bottlenecks, and potential leverage points where AI could intervene. Here, research becomes more important than ever: AI investments are costly and complex, and finding the right use cases means distinguishing real value from “cool features.”

To support this exploration, we use tools such as the AI Landscape Canvas and AI Functionality Cards. These allow teams to scan across the full range of possibilities — from back-office operations to customer-facing journeys, from automating repetitive tasks to augmenting decision-making. By generating a broad spectrum of ideas and then filtering them by impact and effort, organizations can prioritize interventions that are feasible, strategic, and aligned with their values.
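
The impact/effort filtering step can be sketched in a few lines of Python. This is a minimal illustration, not the AI Landscape Canvas or the AI Functionality Cards themselves: the 1 to 5 scoring scale, the threshold of 3, the quadrant names, and the example ideas are all hypothetical assumptions. It places each idea in a classic 2x2 impact/effort matrix and ranks quick wins first.

```python
from dataclasses import dataclass

# Hypothetical 1-5 scale (1 = low, 5 = high); scores would come from team workshops.
THRESHOLD = 3
QUADRANT_ORDER = ["quick win", "strategic bet", "fill-in", "deprioritize"]


@dataclass
class Opportunity:
    name: str
    impact: int  # expected value for users or the organization (1-5)
    effort: int  # cost, data readiness, integration complexity (1-5)


def quadrant(opp: Opportunity, threshold: int = THRESHOLD) -> str:
    """Place an opportunity in a 2x2 impact/effort matrix."""
    high_impact = opp.impact >= threshold
    low_effort = opp.effort < threshold
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "strategic bet"
    if low_effort:
        return "fill-in"
    return "deprioritize"


def prioritize(opps: list[Opportunity]) -> list[Opportunity]:
    """Order by quadrant first, then by higher impact and lower effort."""
    return sorted(
        opps,
        key=lambda o: (QUADRANT_ORDER.index(quadrant(o)), -o.impact, o.effort),
    )


# Illustrative (made-up) ideas, as they might come out of an opportunity-mapping session.
ideas = [
    Opportunity("Customer support chatbot", impact=5, effort=4),
    Opportunity("Invoice data extraction", impact=4, effort=2),
    Opportunity("Meeting summary generator", impact=2, effort=1),
    Opportunity("Demand forecasting model", impact=2, effort=5),
]

ranked = prioritize(ideas)
for opp in ranked:
    print(f"{quadrant(opp):<14} {opp.name}")
```

Keeping the scores as explicit data makes the team's trade-offs visible and easy to revisit as research reveals more about each use case.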

This step is not purely analytical. It is also cultural. Like the adoption of digital technologies in the past, embracing AI requires organizations to reflect on their identity and readiness: Are we prepared to let go of some control? Do we understand what we want to delegate, and what we must protect as uniquely human?

Opportunities are not just technological openings; they are strategic choices about the future of work and value creation.

Our current AI Bootcamps are built around exactly this step, helping people learn to think in depth about the value of AI applications in their own context.

2. Orchestration: Designing Human + AI Collaboration

Once promising opportunities are identified, the next step is orchestration — designing the way AI integrates into services, organizations, and human experiences.

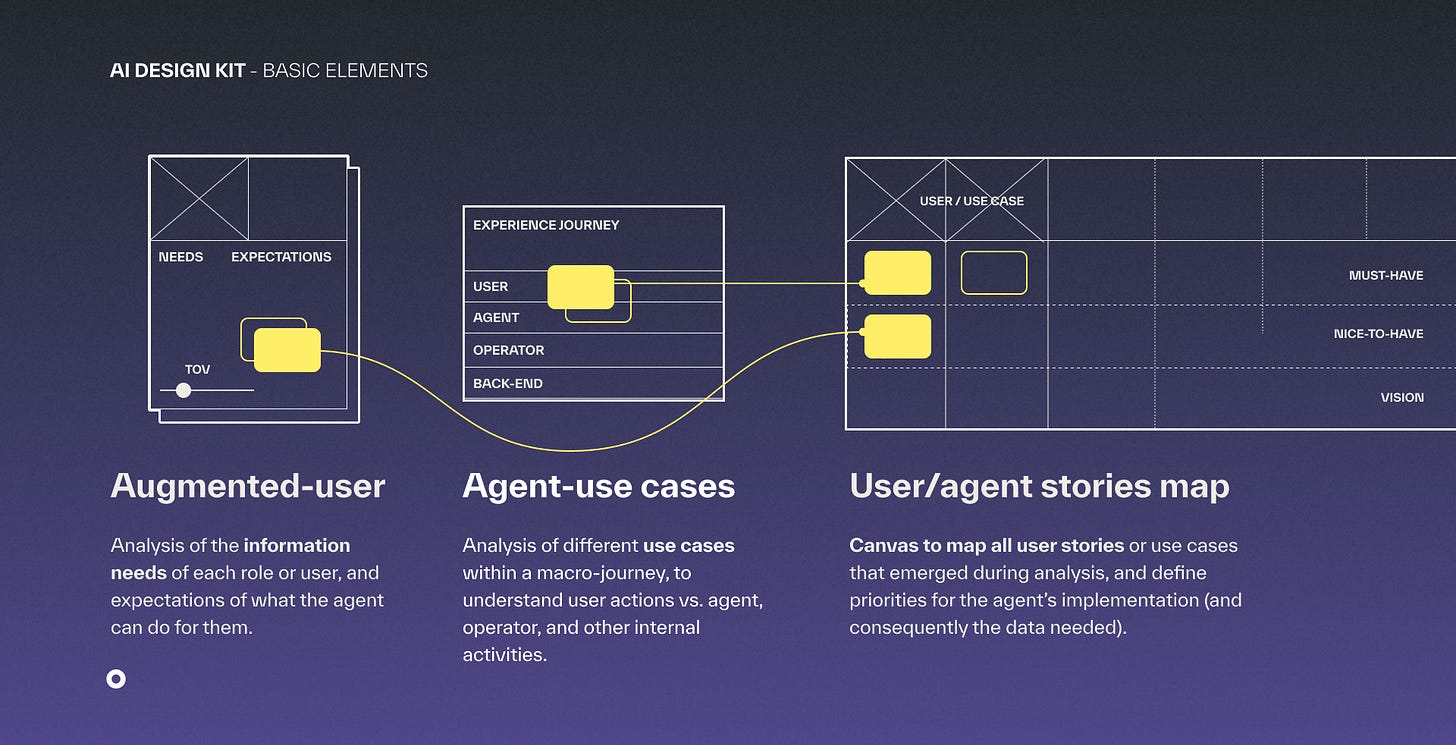

This step is not simply about designing an interface or plugging in a model. It is about designing relationships: between humans and their AI agents, between AI systems and organizations, and between organizations and society.

Designing experiences

AI creates new dynamics between humans and machines. Users may want to delegate certain tasks while retaining control over others. The relationship may be short-term (a one-off recommendation) or long-term (a trusted advisor). It may be invisible (AI as presence), functional (AI as tool), or relational (AI as entity). Designing these dynamics requires conscious choices about control, trust, memory, and proactivity.

To support this, we developed AI Design Principles — guidelines such as Affordance, Control, Adaptability, Consistency, and Comfort — that help define the “experience pillars” of an AI service. These principles act as safeguards, ensuring AI enhances rather than undermines human agency.

Beyond principles, orchestration means mapping the human+agent journey:

Who does what, when?

What does the AI handle autonomously, and where does the human intervene?

How does the relationship evolve over time, and how do organizational values translate into this design?
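
One way to make the human+agent journey explicit is to record each step with its actor and whether the person can override the outcome. The sketch below assumes a simple three-way split (human, AI, shared) and a hypothetical service-request journey; the class and function names are illustrative and not part of any oblo tool. The helper functions answer two of the questions above, what the AI handles alone and where the human intervenes, and also flag autonomous steps that leave the person without control.

```python
from dataclasses import dataclass
from enum import Enum


class Actor(Enum):
    HUMAN = "human"    # the person acts alone
    AI = "ai"          # the agent acts autonomously
    SHARED = "shared"  # the agent proposes, the person decides


@dataclass
class JourneyStep:
    stage: str
    actor: Actor
    human_can_override: bool  # can the person undo or correct the outcome?


# Hypothetical journey for an AI-assisted service request.
journey = [
    JourneyStep("Collect request details", Actor.AI, human_can_override=False),
    JourneyStep("Draft a proposal", Actor.AI, human_can_override=True),
    JourneyStep("Review and approve proposal", Actor.SHARED, human_can_override=True),
    JourneyStep("Explain decision to customer", Actor.HUMAN, human_can_override=True),
]


def autonomous_steps(steps: list[JourneyStep]) -> list[str]:
    """What does the AI handle on its own?"""
    return [s.stage for s in steps if s.actor is Actor.AI]


def human_touchpoints(steps: list[JourneyStep]) -> list[str]:
    """Where does the person intervene?"""
    return [s.stage for s in steps if s.actor in (Actor.HUMAN, Actor.SHARED)]


def control_gaps(steps: list[JourneyStep]) -> list[str]:
    """Autonomous steps the person cannot override: candidates for redesign."""
    return [s.stage for s in steps if s.actor is Actor.AI and not s.human_can_override]


print("AI alone:", autonomous_steps(journey))
print("Human in the loop:", human_touchpoints(journey))
print("Control gaps:", control_gaps(journey))
```

A step flagged by `control_gaps` is not necessarily wrong, but it is a place where the Control principle deserves a second look.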

Read more about new AI design grammar and principles.

Transforming organizations

Orchestration is not just about user-facing design. Adopting AI requires organizational adaptation: shifting roles, introducing governance mechanisms, and rethinking workflows. AI does not remain confined to the interface; it reshapes how teams collaborate, how decisions are made, and how cultural values are enacted in daily operations. This cultural transformation is as important as technical deployment.

AI adoption forces organizations to revisit fundamental questions:

What do we want to delegate to machines, and what must remain human?

How do we balance efficiency with empathy?

Which new skills, safeguards, or rituals are needed to sustain trust?

3. Outcomes: Sustaining Impact and Responsibility

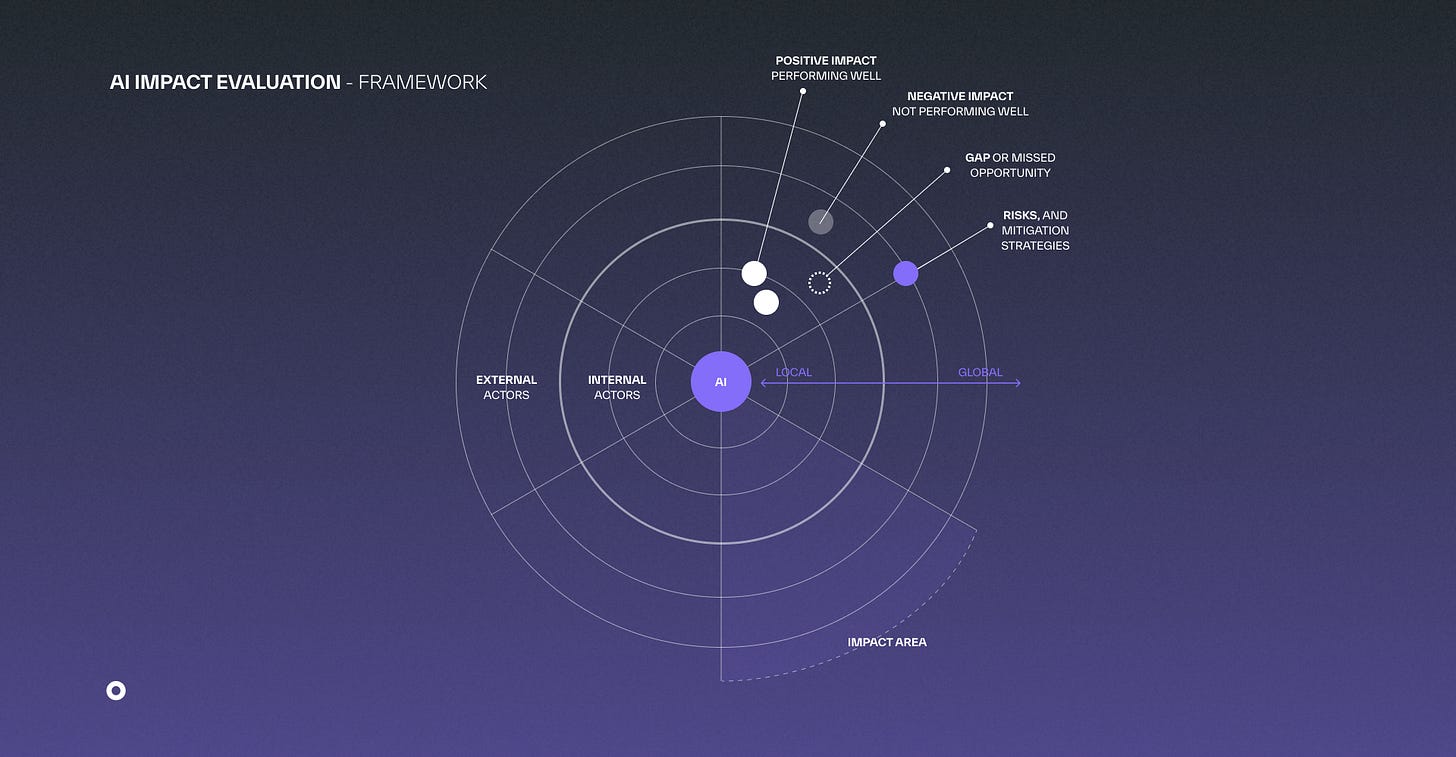

The third step of the framework focuses on outcomes: what results does AI actually deliver, and how can we ensure they remain aligned with organizational values and societal responsibility over time?

AI systems are not static. They evolve with new data, sometimes drifting into unintended behaviors. Without careful monitoring, AI can entrench bias, manipulate users, or create dependencies. Designers and organizations therefore need mechanisms to evaluate, adapt, and govern AI beyond launch.

We propose tools like the AI Impact Map to help teams define impact goals and monitoring protocols early in the process. By establishing metrics during design and prototyping, organizations can track whether AI is delivering value — not just in efficiency terms, but across broader ESG dimensions: environmental footprint, social impact, and governance implications.
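
To make impact goals concrete, the metrics defined early can be written down with a dimension, a target, and a direction, then checked in every monitoring cycle. The sketch below is a hypothetical stand-in for an AI Impact Map, not the tool itself: the metric names, targets, and observed values are invented for illustration. A metric with no reading is flagged together with those that miss their target, since an unmeasured goal cannot be shown to be met.

```python
from dataclasses import dataclass


@dataclass
class ImpactMetric:
    name: str
    dimension: str  # e.g. "efficiency", "environmental", "social", "governance"
    target: float
    higher_is_better: bool

    def on_track(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Hypothetical metrics and targets, agreed on during design and prototyping.
impact_map = [
    ImpactMetric("Average handling time (min)", "efficiency", 6.0, higher_is_better=False),
    ImpactMetric("Energy per 1,000 requests (kWh)", "environmental", 0.5, higher_is_better=False),
    ImpactMetric("User satisfaction (1-5)", "social", 4.0, higher_is_better=True),
    ImpactMetric("Decisions with human review (%)", "governance", 95.0, higher_is_better=True),
]


def off_track(metrics: list[ImpactMetric], observed: dict[str, float]) -> list[tuple[str, str]]:
    """Return (dimension, metric) pairs that miss their target or were not measured."""
    flagged = []
    for m in metrics:
        value = observed.get(m.name)
        if value is None or not m.on_track(value):
            flagged.append((m.dimension, m.name))
    return flagged


# Illustrative readings from one monitoring cycle (made-up numbers).
this_quarter = {
    "Average handling time (min)": 5.2,
    "Energy per 1,000 requests (kWh)": 0.7,
    "User satisfaction (1-5)": 4.3,
    "Decisions with human review (%)": 91.0,
}

for dimension, name in off_track(impact_map, this_quarter):
    print(f"[{dimension}] {name} is off target")
```

Here efficiency looks fine while the environmental and governance goals slip, which is exactly the kind of trade-off a purely efficiency-focused dashboard would hide.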

Several areas are critical here:

Continuous validation: AI must be monitored over time to detect drifts, errors, or shifts in behavior.

Bias emergence: biases may not only come from training data but also surface after deployment in real contexts.

Extended impacts: beyond immediate users, AI has environmental footprints (energy, water), labor implications (hidden digital work), and systemic social consequences.

User well-being: design must protect long-term psychological health, preventing manipulative patterns or unhealthy dependence.
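
Continuous validation often starts with a distribution check. The sketch below uses the Population Stability Index (PSI), a widely used drift measure, to compare how a model's outputs are spread across categories at launch and later on. The category counts are hypothetical, and the 0.1 and 0.25 cut-offs are common rules of thumb rather than part of the framework; each service would need to choose its own.

```python
import math


def proportions(counts: list[int]) -> list[float]:
    total = sum(counts)
    return [c / total for c in counts]


def psi(baseline_counts: list[int], current_counts: list[int], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    PSI = sum((current_i - baseline_i) * ln(current_i / baseline_i)),
    computed on per-bin proportions. eps avoids log(0) for empty bins.
    """
    if len(baseline_counts) != len(current_counts):
        raise ValueError("both distributions must use the same bins")
    score = 0.0
    for b, c in zip(proportions(baseline_counts), proportions(current_counts)):
        b, c = max(b, eps), max(c, eps)
        score += (c - b) * math.log(c / b)
    return score


def drift_status(score: float, warn: float = 0.1, alert: float = 0.25) -> str:
    # Common rule-of-thumb cut-offs (an assumption); tune them per service.
    if score < warn:
        return "stable"
    if score < alert:
        return "monitor"
    return "investigate"


# Hypothetical counts of a classifier's outputs (e.g. approve / review / reject).
at_launch = [50, 30, 20]
same_mix = [100, 60, 40]
this_month = [20, 30, 50]

for label, counts in [("same mix", same_mix), ("this month", this_month)]:
    score = psi(at_launch, counts)
    print(f"{label}: PSI={score:.3f} -> {drift_status(score)}")
```

Running the same comparison separately for each user group is one possible way to notice post-deployment bias emergence, since a shift can appear in one group while the overall mix still looks stable.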

By involving diverse stakeholders and considering both direct and indirect effects, designers can broaden the lens from immediate utility to systemic impact. This makes AI adoption more accountable, resilient, and aligned with broader sustainability goals across social, environmental, and governance dimensions.

AI adoption is not only a technological step; it is a cultural transformation. For designers and organizations alike, the challenge is not to keep pace with AI but to shape it consciously, so that future services are not only efficient but also sustainable, trustworthy, and deeply human. This framework synthesizes years of our work on AI-related projects, along with tools we have used and developed ad hoc in different contexts.

Stay tuned for more content on the framework, or check out our Bootcamp to learn it hands-on.